Back when I did software development work (secure, data-intensive, interactive Web applications -- .NET and J2EE), I would finish debugging a project on my PC and then deploy the app to a test server, from which it would be downloaded by every manner of user. Most of them were running Internet Explorer, some Firefox or Safari, and a few some other browser. The problem was that some of these users, those running a different browser than mine, discovered that the app didn't render as expected.

Today, unfortunately, writing dynamic web applications is still a tedious and error-prone process; some developers spend up to 75% of their time working around subtle incompatibilities between web browsers and platforms.

With the widespread adoption of Electronic Health Records (EHR) imminent, the likelihood that a given Web app will be downloaded by someone using a browser other than the one(s) used to develop and test the app will only increase.

But help may be on the way: cross-browser Web development may be getting easier and more efficient with the upcoming version 2 of Google Web Toolkit (GWT). Google claims that GWT applications will, in most cases, automatically support Internet Explorer, Firefox, Mozilla, Safari, and Opera with no browser detection or special-casing within your code.

The figure below shows the GWT implementation of an app that would not otherwise be expected to render exactly the same in Internet Explorer and Firefox.

Note: A few examples of cross-browser incompatibility appear in the figures of my 2005 article ASP.NET & Multiplatform Environments (a link to it is provided in the bibliography at the bottom of this blog).

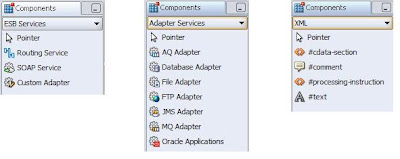

GWT is an interesting platform that lets you write Java code, which the GWT compiler automatically converts into JavaScript. This provides a simple way to create Ajax apps using Java. Google also created a plug-in for Eclipse that simplifies debugging and deploying.

With Ajax, Web applications can retrieve data from the server asynchronously in the background without interfering with the display and behavior of the existing page. Because of this asynchronous mode, the use of Ajax has improved the quality of Web services [see my June 28 post].
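To make the asynchronous idea concrete, here is a minimal sketch in plain Java. It is only an analogy: it uses the standard library's CompletableFuture rather than any GWT or browser API, and the names AsyncFetchSketch, fetchFromServer, and fetchAsync are hypothetical, invented for this illustration. The "server" is simulated with a short sleep.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

class AsyncFetchSketch {

    // Hypothetical stand-in for a server call; a real Ajax request
    // would travel over HTTP instead of sleeping.
    static String fetchFromServer(String resource) {
        try {
            TimeUnit.MILLISECONDS.sleep(200); // simulate network latency
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "data for " + resource;
    }

    // Starts the request on a background thread and returns immediately,
    // so the caller is not blocked while the data is retrieved.
    static CompletableFuture<String> fetchAsync(String resource) {
        return CompletableFuture.supplyAsync(() -> fetchFromServer(resource));
    }

    public static void main(String[] args) {
        // Register a callback that runs once the data arrives.
        CompletableFuture<Void> done = fetchAsync("report")
                .thenAccept(data -> System.out.println("Callback received: " + data));

        // This line runs without waiting for the simulated server.
        System.out.println("Page stays usable while the request is in flight");

        // Wait for the callback so the program does not exit early.
        done.join();
    }
}
```

The point of the sketch is the shape of the call: the request is started, the caller carries on, and a callback handles the response later. In a browser, the page itself plays the role of the caller that keeps running.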

The core language of the World Wide Web

Until recently, most simple browser applications were created in HTML 4.01, which could be enhanced with JavaScript and CSS. For Web applications that were as rich as desktop applications, developers had to embed Adobe Flash or, more recently, Microsoft Silverlight apps in their HTML code. But a new version of HTML, HTML 5, the fifth major revision of the core language of the World Wide Web, is bringing this same rich functionality to the browser without the need for Flash or Silverlight.

A good summary of the major features of HTML 5 is in Tim O'Reilly's blog post about it.

http://radar.oreilly.com/2009/05/google-bets-big-on-html-5.html

However, there is still a problem. HTML 5-compliant browsers currently account for only 60% of the market, and quite a few enterprises are still on Internet Explorer 6. So Flash, which has the distinct advantage of working on older browsers and has about 95% market penetration, is still very popular. Some expect, however, that HTML 5 will be as popular as Flash by 2011.

Even though HTML 5 is not yet a finished standard, most of it is already supported in major browsers: Firefox 3, Internet Explorer 8, and Safari 4. This means that you can create an HTML 5 application right now!

Note: Flex, like ActiveX, Silverlight, and Java applets before it, is in a sense a replacement for the browser. But each replaces the web browser in a proprietary way.

HTML 5 is right around the corner

While the complete HTML 5 standard is years from adoption, many of its powerful features are available in browsers today. In fact, five key next-generation features are already available in the latest (sometimes experimental) browser builds of Firefox, Opera, Safari, and Google Chrome. Microsoft has announced that it will support HTML 5, but as Vic Gundotra, VP of Engineering at Google, has noted, "We eagerly await evidence of that."

GWT meets HTML 5

Google Web Toolkit is a really good way to "easily" design a flashy HTML/CSS/JavaScript application that works as expected across different browsers and operating systems.
